[smooth=id:1 ; width:900; height:700]

Click on the arrows to page through the tutorial on the statistical concepts of specificity and sensitivity. In my experience, people get confused when they try … Read the rest

The purpose of inferential statistics is to estimate differences between groups in the general population by measuring the difference in a small sample.

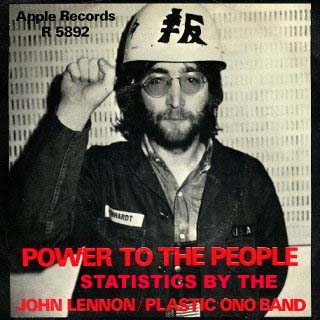

OK, so John Lennon didn’t really write this, but statistical power is a very abstract concept and the ability to “imagine” really helps.

Power is the probability … Read the rest

Definition: The number needed to treat (NNT) is the number of patients that you would need to treat to prevent one primary outcome (heart attack, death, stroke, whatever).
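In practice the NNT is usually computed as the reciprocal of the absolute risk reduction (the event rate in the control group minus the event rate in the treated group), rounded up to a whole patient. Here is a minimal sketch of that arithmetic in Python. The function name and the trial counts are made up for illustration and are not taken from any real study.

```python
import math
from fractions import Fraction


def number_needed_to_treat(control_events, control_total,
                           treated_events, treated_total):
    """Return the NNT as 1 / absolute risk reduction, rounded up.

    Hypothetical helper: all four arguments are raw counts from a
    two-arm trial (events and patients in each arm).
    """
    # Exact fractions avoid floating-point surprises in the division.
    control_rate = Fraction(control_events, control_total)
    treated_rate = Fraction(treated_events, treated_total)

    # Absolute risk reduction: how much the treatment lowers the event rate.
    arr = control_rate - treated_rate
    if arr <= 0:
        raise ValueError("treatment shows no risk reduction; NNT is undefined")

    # Rounding up is the usual convention: you cannot treat part of a patient.
    return math.ceil(1 / arr)


# Invented numbers: 10 of 100 control patients have the outcome,
# versus 5 of 100 treated patients.
print(number_needed_to_treat(10, 100, 5, 100))
```

With these invented counts the absolute risk reduction is 10% minus 5%, or 5%, so you would need to treat 20 patients to prevent one outcome.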

Albert and I developed an acute interest in risk reduction at about 3500 feet.

Examples:

Example 1A:

Pickles are associated with all the major diseases of the body. Eating them breeds war and Communism. They can be related to most airline tragedies. Auto accidents are caused by … Read the rest